Privacy-safe geo-targeting explained: coarse geofencing, anonymization, on-device processing, and sensitive-location exclusions to meet GDPR and CCPA.

Geo-targeting in Programmatic Digital Out-of-Home (pDOOH) advertising allows ads to be displayed based on location data, such as proximity to a store or local weather. While effective, this practice raises privacy concerns due to the potential misuse of precise location data, which can reveal sensitive personal details. To address these concerns, advertisers rely on methods like data aggregation, anonymization, and pseudonymization to protect individual privacy while complying with regulations like GDPR, CCPA, and CPRA.

Key takeaways:

Platforms like Enroute View Media demonstrate how privacy-compliant geo-targeting can deliver relevant ads by focusing on aggregated data and contextual signals instead of tracking individuals.

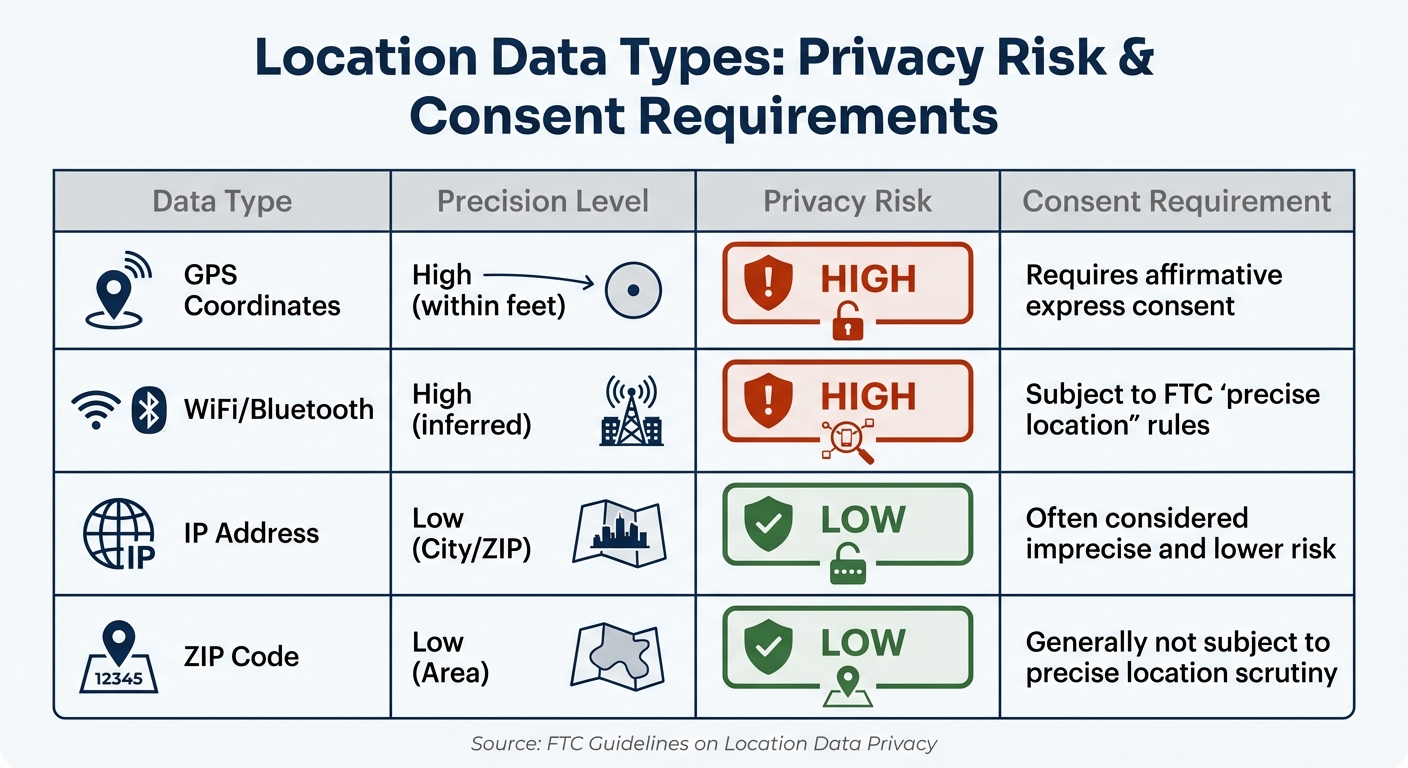

Location Data Types: Privacy Risk Levels and Consent Requirements

In the United States, laws like the CCPA, CPRA, and the Delete Act regulate how location data is used. New rules under the California Consumer Privacy Act (CCPA), as amended by the California Privacy Rights Act (CPRA), take effect on January 1, 2026. They address cybersecurity audits, risk assessments, and the use of automated decision-making technologies. On the same date, requirements under California's Delete Act also apply, mandating that data brokers participate in a centralized "Delete Request and Opt-out Platform" (DROP).

Other states, including Virginia, Colorado, Connecticut, Utah, and Iowa, have rolled out similar privacy frameworks. For instance, Iowa's SF 262 took effect on January 1, 2025. Virginia's Consumer Data Protection Act (VCDPA) applies to entities handling the personal data of at least 100,000 residents. These laws often require businesses to provide individuals with opt-out options for targeted advertising.

The Federal Trade Commission (FTC) has been actively enforcing location data privacy. In December 2024, the FTC reached a settlement with Mobilewalla, which had collected sensitive location data through ad exchanges and real-time bidding auctions without proper consent. This settlement prohibited the retention of such data for non-auction purposes. Similarly, in January 2024, the FTC settled with X-Mode Social Inc. (now Outlogic LLC), requiring the deletion of all previously collected sensitive location data.

Grasping these regulations is crucial for understanding the privacy-preserving techniques discussed later.

Techniques like pseudonymization, aggregation, and anonymization help reduce the risks of identifying individuals by altering or removing personal identifiers.

Privacy laws and regulators distinguish between "precise geolocation data" (like GPS coordinates accurate within a few feet) and "imprecise" data (such as city-level or ZIP code-based data from IP addresses). Using less precise data can reduce legal scrutiny and ease consent requirements. Anonymized and aggregated data often fall outside the legal definitions of "personal data" or "personal information", meaning they are not subject to opt-out requests or the "Do Not Sell or Share" requirements under the CCPA/CPRA.

State laws in Virginia, Colorado, and Connecticut classify targeted advertising as having a "heightened risk of harm". Aggregation and anonymization help mitigate these risks, particularly when dealing with sensitive locations like medical centers or places of worship. The FTC has been particularly focused on ensuring that individuals are not targeted based on their presence at such sensitive sites.

These privacy-preserving methods strike a balance between effective marketing and compliance, paving the way for a closer look at specific data types and their associated risks.

Not all location data is created equal when it comes to privacy risks. The FTC categorizes high-risk location data as "precise location" information, which includes GPS data, cell tower information, and data derived from WiFi or Bluetooth signals. Real-time tracking of movement poses a higher risk compared to static location points recorded at a specific time.

| Data Type | Precision Level | Privacy Risk | Consent Requirement |
|---|---|---|---|
| GPS Coordinates | High (within feet) | High | Requires affirmative express consent |
| WiFi/Bluetooth | High (inferred) | High | Subject to FTC "precise location" rules |
| IP Address | Low (City/ZIP) | Low | Often considered imprecise and lower risk |
| ZIP Code | Low (Area) | Low | Generally not subject to precise location scrutiny |

Data revealing visits to sensitive locations - such as medical facilities, places of worship, or schools - faces stricter rules. Recent FTC settlements prohibit the companies involved from selling or sharing data that indicates someone’s presence at these locations. Advertisers must secure "affirmative express consent", which must be freely given, specific, informed, and unambiguous. They are also required to provide clear disclosures about the data being collected, its purpose, and who it will be shared with.

When it comes to privacy in digital out-of-home (DOOH) advertising, several technical strategies are in play to ensure user data remains protected.

Coarse geolocation works by grouping locations into broader areas - like neighborhoods, cities, or ZIP codes - rather than pinpointing exact GPS coordinates. For instance, Google restricts the precision of latitude and longitude to 0.01 degrees and includes an "accuracy" field that indicates the approximate radius of the location.

"In order to protect user privacy, Google only provides a coarse geolocation that is shared by a sufficiently large number of users, generalizing detected location as necessary." - Google Authorized Buyers

This approach allows advertisers to target broader regions without zeroing in on individual users, significantly lowering privacy risks.
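As a rough illustration of what 0.01-degree precision means in practice, the sketch below rounds a coordinate to two decimal places and estimates the size of the resulting grid cell. The function names and the 111.32 km-per-degree constant are assumptions made for this example. They are not taken from Google's implementation, which also generalizes locations based on how many users share them.

```python
import math

# Approximate length of one degree of latitude in kilometres (assumed constant).
KM_PER_DEGREE_LAT = 111.32


def coarsen(lat: float, lon: float, decimals: int = 2) -> tuple[float, float]:
    """Round a coordinate so that it identifies a grid cell, not a point."""
    return round(lat, decimals), round(lon, decimals)


def cell_size_km(lat: float, decimals: int = 2) -> tuple[float, float]:
    """Return the approximate (height, width) in km of one grid cell at `lat`."""
    step = 10 ** -decimals
    height = KM_PER_DEGREE_LAT * step
    # Longitude lines converge toward the poles, so cells get narrower.
    width = KM_PER_DEGREE_LAT * math.cos(math.radians(lat)) * step
    return height, width


if __name__ == "__main__":
    print(coarsen(40.748817, -73.985428))  # (40.75, -73.99)
    print(cell_size_km(40.75))
```

At the latitude used above, a 0.01-degree cell is about 1.1 km tall and roughly 0.84 km wide, so a rounded coordinate describes a neighborhood-sized area rather than a building.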

Taking this a step further, on-device processing ensures even tighter control over sensitive data.

On-device processing keeps sensitive location data on the user's device, transmitting only anonymized or aggregated signals to external servers. Unlike server-based systems that handle raw data, this method processes information locally and filters out sensitive details before any data leaves the device. Because the privacy safeguards run at the edge, only non-identifiable data is shared.

In programmatic DOOH, this technique enables media players to react to real-time triggers - like shifts in weather or traffic - without needing to track individual devices. Essentially, the device acts as both the data source and processor, reducing the risk of data breaches and re-identification.
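The sketch below shows one way the "process locally, share only aggregates" idea could look in code. It is a minimal illustration, not a description of any vendor's pipeline: the `RawFix` record, the `summarize_on_device` function, and the payload shape are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class RawFix:
    """A raw location reading held only in local memory (hypothetical shape)."""
    device_id: str  # never copied into the outgoing payload
    lat: float
    lon: float
    hour: int       # local hour of day, 0-23


def summarize_on_device(fixes: list[RawFix]) -> list[dict]:
    """Reduce raw fixes to counts per (coarse cell, hour) before upload."""
    counts: Counter = Counter()
    for fix in fixes:
        cell = (round(fix.lat, 2), round(fix.lon, 2))  # coarse grid cell
        counts[(cell, fix.hour)] += 1
    # Only the aggregate leaves the device; identifiers are dropped here.
    return [
        {"cell": cell, "hour": hour, "count": n}
        for (cell, hour), n in sorted(counts.items())
    ]
```

The point of the design is that the server never receives a `device_id` or an exact coordinate, so there is less to protect and less to leak.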

Contextual targeting takes a different route by using environmental signals - such as time of day, weather, traffic patterns, or venue type - instead of tracking individuals. For example, a DOOH screen near a gym might display fitness ads during peak hours, or an airport terminal screen could show travel-related content based on its location.

This method focuses on serving ads that align with the venue's characteristics rather than personal data. In practice, GPS data is often analyzed in aggregate to identify trends in consumer movement, ensuring privacy is upheld while still delivering relevant advertising.
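A contextual rule set can be sketched in a few lines. The venue types, hour ranges, and creative names below are invented for illustration. The only inputs are properties of the screen and its surroundings, never of a person.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScreenContext:
    """Environmental signals for one screen (hypothetical fields)."""
    venue_type: str
    hour: int      # local hour of day, 0-23
    weather: str


def is_gym_peak(ctx: ScreenContext) -> bool:
    """Assumed peak hours for gyms: early morning and early evening."""
    return ctx.venue_type == "gym" and (6 <= ctx.hour < 9 or 17 <= ctx.hour < 20)


# Ordered rules: the first matching predicate decides the creative.
RULES = [
    (is_gym_peak, "fitness"),
    (lambda ctx: ctx.venue_type == "airport", "travel"),
    (lambda ctx: ctx.weather == "rain", "umbrella"),
]


def pick_creative(ctx: ScreenContext, default: str = "brand") -> str:
    """Choose a creative from context alone, with a generic fallback."""
    for predicate, creative in RULES:
        if predicate(ctx):
            return creative
    return default
```

For example, `pick_creative(ScreenContext("gym", 18, "clear"))` returns `"fitness"`, while a street-level screen at noon in clear weather falls back to `"brand"`.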

Ensuring privacy compliance in geo-targeting campaigns goes beyond just picking the right tech tools. Advertisers and platforms must adopt operational safeguards to responsibly manage location data throughout its lifecycle - from collection to campaign execution.

Advertisers need to carefully evaluate their data providers to confirm that location data is collected lawfully and with proper consent. The Federal Trade Commission (FTC) has emphasized the importance of obtaining affirmative express consent - a clear, informed, and unambiguous agreement from users - before collecting location data. Past FTC cases, such as those involving InMarket Media LLC and X-Mode Social Inc., highlight the need for rigorous documentation of consent and the exclusion of sensitive location information.

"Affirmative express consent is a 'freely given, specific, informed, and unambiguous indication of an individual consumer's wishes demonstrating agreement by the individual'." – Federal Trade Commission (FTC)

When assessing data providers, advertisers should ensure they:

Providers should also have robust policies to exclude sensitive locations from their datasets and use coarse geolocation methods when precise data isn’t necessary. These practices help reduce privacy risks and align with broader compliance measures.

Once data practices are verified, advertisers must take proactive steps to exclude high-risk locations from their campaigns. Certain places - like religious institutions, healthcare facilities, military bases, correctional facilities, and schools - pose greater privacy concerns and should be avoided in targeting efforts.

To identify these sensitive locations, advertisers can use classification systems like the North American Industry Classification System (NAICS), whose industry codes make it possible to flag points of interest such as hospitals, religious organizations, and schools. Additionally, as of October 2024, companies such as Cuebiq, Foursquare, InMarket, Outlogic, and Precisely PlaceIQ have voluntarily adopted stricter standards, prohibiting the use or sale of precise location data tied to these sensitive areas.

Many platforms provide "Exclusion Targeting" settings, which allow advertisers to omit specific geographic areas from their campaigns while targeting other locations. Internal policies should ensure that individuals are never categorized or targeted based on their presence at these sensitive points of interest.
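An exclusion check of this kind might be sketched as follows. The NAICS prefixes, the 200-meter buffer, and the POI record shape are assumptions chosen for illustration, so verify the codes against the current NAICS manual and your own policy before relying on them.

```python
import math

# Illustrative NAICS prefixes for sensitive categories (assumed, not exhaustive):
# 622 hospitals, 8131 religious organizations, 6111 elementary/secondary schools,
# 92214 correctional institutions, 92811 national security.
SENSITIVE_NAICS_PREFIXES = ("622", "8131", "6111", "92214", "92811")


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two coordinates."""
    radius_m = 6_371_000
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * radius_m * math.asin(math.sqrt(a))


def is_sensitive(naics_code: str) -> bool:
    """True when the code falls under one of the sensitive prefixes."""
    return naics_code.startswith(SENSITIVE_NAICS_PREFIXES)


def allowed_target(lat: float, lon: float, pois: list[dict], buffer_m: float = 200.0) -> bool:
    """False when the point lies within `buffer_m` of any sensitive POI."""
    return not any(
        is_sensitive(poi["naics"])
        and haversine_m(lat, lon, poi["lat"], poi["lon"]) <= buffer_m
        for poi in pois
    )
```

Running the check before a geofence is saved, rather than at serving time, keeps sensitive zones out of campaign definitions altogether.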

Transparent privacy notices are not just a best practice - they are legally required. These notices must be clear and noticeable, using text size, contrast, and placement to ensure they aren’t buried in lengthy terms of service.

| Notice Requirement | Description |
|---|---|
| Information Categories | Specify the types of data being collected (e.g., GPS, IP, WiFi). |
| Purpose of Use | Explain if the data is used for advertising, analytics, or shared with third parties. |
| Entity Identification | Name the companies or types of entities receiving the data. |
| Withdrawal Mechanism | Provide a direct link for users to easily opt out. |
| Conspicuousness | Ensure the notice is prominent through text size, color, and contrast. |

In addition to clear disclosures, platforms should implement technical measures like on-device processing and data minimization. For example, reducing coordinate precision to 0.01 degrees can ensure location data applies to larger groups, minimizing the risk of identifying individuals. Combining these measures with documented consent practices creates a strong foundation for privacy compliance.

Enroute View Media’s taxi and rideshare advertising platform showcases a privacy-conscious approach to geo-time targeting. By using contextual triggers like specific routes, zones, time of day, and even local weather conditions, the system activates ads on rooftop LED screens and in-taxi displays. Crucially, this method avoids personal identifiers, ensuring passengers stay anonymous while still receiving relevant advertisements. Let’s take a closer look at how this system balances privacy and targeted advertising.

Rather than tracking individual passengers, the platform uses aggregate GPS data to identify movement patterns at a group level. By relying on generalized geolocation data, it triggers ads when vehicles enter specific areas, without creating individual profiles or exposing detailed travel histories to advertisers. This approach ensures privacy is maintained while delivering location-relevant messages.
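A zone trigger of this kind can be sketched with simple bounding boxes. The `Zone` type and `creative_for_vehicle` function are hypothetical and are not Enroute View Media's actual implementation. Note that the only position involved is the vehicle's own.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Zone:
    """A rectangular campaign zone (hypothetical shape for illustration)."""
    name: str
    min_lat: float
    max_lat: float
    min_lon: float
    max_lon: float
    creative: str

    def contains(self, lat: float, lon: float) -> bool:
        return (self.min_lat <= lat <= self.max_lat
                and self.min_lon <= lon <= self.max_lon)


def creative_for_vehicle(lat: float, lon: float, zones: list[Zone]) -> Optional[str]:
    """Return the creative for the first zone containing the vehicle, if any."""
    for zone in zones:
        if zone.contains(lat, lon):
            return zone.creative
    return None  # outside every zone: play the default loop
```

Because the decision depends only on which zone the vehicle is in, nothing about who is riding or where they have been needs to be stored.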

The platform provides advertisers with real-time analytics based on aggregated, de-identified data. These reports include metrics like ad impressions, exposure rates, and campaign performance, all without accessing raw device location histories. As a GDPR-compliant data processor, Enroute View Media strictly uses this information for measuring and delivering ads, avoiding the storage of unnecessary personal details.
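One common safeguard for aggregated reports, shown here as an assumption rather than as a documented feature of the platform, is to suppress any zone whose count falls below a minimum threshold so that small groups cannot be singled out. The function name and the default threshold are illustrative.

```python
from collections import Counter


def zone_report(impression_zones: list[str], min_count: int = 10) -> dict[str, int]:
    """Count impressions per zone and drop zones below `min_count`."""
    counts = Counter(impression_zones)
    return {zone: n for zone, n in counts.items() if n >= min_count}


if __name__ == "__main__":
    events = ["midtown"] * 12 + ["harbor"] * 3
    print(zone_report(events))  # {'midtown': 12}
```

The right threshold depends on the dataset and the applicable guidance. The value of 10 is only a placeholder.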

This focus on aggregated data not only safeguards privacy but also equips advertisers with actionable insights. It’s a practical way to manage campaigns effectively while respecting user anonymity.

Enroute View Media goes a step further by offering advertisers advanced privacy controls. With tools for zone management, advertisers can exclude sensitive locations - such as hospitals, schools, and places of worship - from their targeting parameters. Additionally, the platform minimizes data use by limiting coordinate precision and applying truncated identifiers to obscure exact locations.

These features address the challenge of balancing precision with privacy. The platform’s white-labeled solutions also allow fleet owners and marketing startups to customize data retention settings and include privacy notices that align with regulatory standards. This flexibility ensures campaigns remain compliant with evolving privacy laws, including California’s Delete Act, which takes effect in January 2026.

Achieving geo-targeting that respects privacy isn't just possible - it's quickly becoming the norm. At its heart lies data minimization - collecting only the information needed for a specific campaign or ad auction. This means relying on aggregated GPS data to analyze group movement patterns instead of tracking individuals’ detailed travel histories.

Three key principles shape this approach:

These principles don’t just set the tone for ethical data collection - they also guide the development of secure advertising technologies. Protecting sensitive locations is another critical piece of the puzzle. Platforms must enforce strict measures to avoid collecting or sharing data tied to places like healthcare facilities, religious institutions, or political events. For example, the FTC's December 2024 settlement with Mobilewalla barred the data broker from retaining location data gathered through ad auctions for non-auction purposes, emphasizing the importance of strict compliance.

Together, these principles and practices create a framework for balancing precision in geo-targeting with the need to safeguard user privacy.

Platforms such as Enroute View Media illustrate how privacy-safe geo-targeting can be achieved in practice. By leveraging contextual triggers - like weather, time of day, or specific geographic zones - they activate ads without relying on individual profiles. Their tools also allow advertisers to exclude sensitive locations, while using truncated data and limited coordinate precision to avoid pinpointing exact user locations.

The results speak for themselves. Numerous programmatic DOOH campaigns have shown measurable increases in purchase intent, brand consideration, and foot traffic - all while adhering to strict privacy standards. These successes highlight the effectiveness of targeting broader movement trends and environmental factors, rather than focusing on individuals.

The move toward privacy-safe geo-targeting isn’t just about meeting regulatory requirements - it’s a smart, forward-thinking approach. Advertisers who embrace these practices today are setting themselves up for success in a world that values both precision and privacy.

Geo-targeting respects privacy by focusing on user consent and maintaining transparency. Before any location data is collected, users are notified with clear explanations and must actively opt in. To minimize privacy risks, only general or aggregated location data (like IP-based estimates) is used, steering clear of collecting exact details unnecessarily.

Privacy is further safeguarded with simple opt-out options, allowing users to withdraw their consent whenever they choose. These measures comply with GDPR’s rules for lawful data handling and consent, as well as CCPA’s focus on providing notice and the right to opt out of the sale or sharing of personal information.

When it comes to protecting privacy, location data undergoes anonymization through methods like differential privacy. This technique introduces statistical noise, effectively masking individual details. Beyond that, the data is grouped into larger geographic regions or broader time periods, ensuring individual identities remain untraceable. To add another layer of security, only generalized location details - such as approximate geolocation based on IP addresses - are used, safeguarding user privacy even further.
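The noise-adding step can be illustrated with the Laplace mechanism, a standard way to apply differential privacy to counts. This is a minimal sketch using only the standard library. The function names, the `epsilon` value, and the sensitivity of 1 (each person changes a count by at most one) are assumptions for the example.

```python
import random
from typing import Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def noisy_count(true_count: int, epsilon: float,
                rng: Optional[random.Random] = None,
                sensitivity: float = 1.0) -> float:
    """Release a count with Laplace noise of scale sensitivity / epsilon."""
    if rng is None:
        rng = random.Random()
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    # Smaller epsilon means more noise and stronger privacy.
    print(noisy_count(1200, epsilon=0.5))
```

Individual released values are perturbed, but averages over many zones or time periods stay close to the truth, which is why the technique suits aggregate reporting.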

Advertisers can uphold privacy standards by deliberately excluding sensitive locations - like hospitals, places of worship, and military bases - from their geo-targeting efforts. This is typically done using classification systems such as NAICS, which help identify these sensitive points of interest (POIs) and ensure they are added to exclusion lists.

By steering clear of these areas, advertisers avoid using precise location data where privacy concerns are more pronounced. Aligning with established privacy guidelines, such as those set by the NAI, supports ethical advertising practices while safeguarding user privacy.
